Physics Execution Workflow with Open DRM and Detached Controller Host


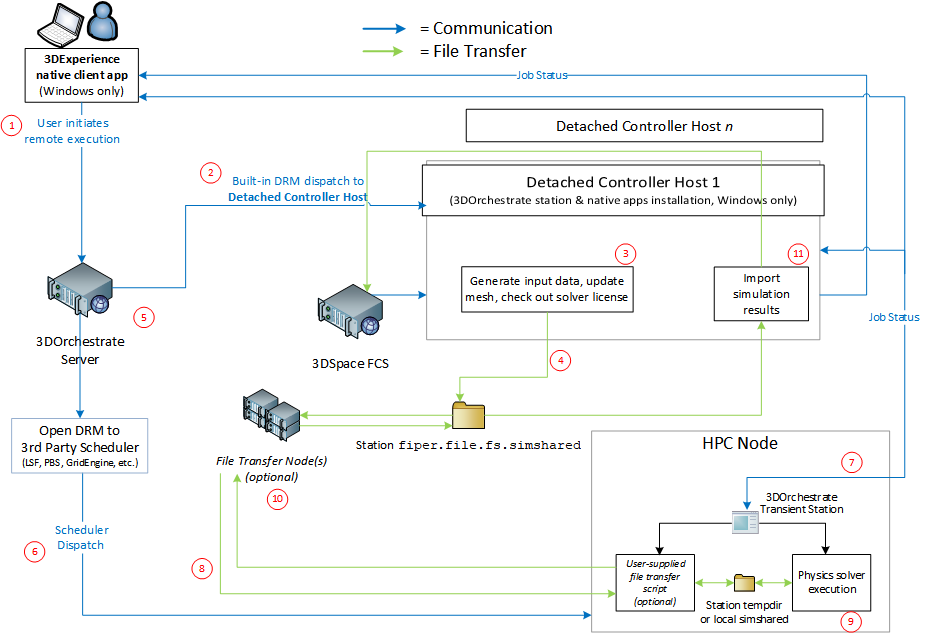

This system requires one or more Detached Controller Host stations. The physics solvers execute on the HPC (High Performance Computing) nodes by means of a 3DOrchestrate transient station (SMAExeTranstation).

These simulations include Abaqus solver FEA simulations submitted from the physics apps—Linear Structural Validation, Structural Scenario Creation, Mechanical Scenario Creation, Fluid Scenario Creation, etc.—as well as topology (shape) optimizations and parametric optimizations submitted from the Functional Generative Design (GDE) app. After you have configured the network infrastructure, these remote solver jobs execute automatically through a built-in simulation process.

The diagram above shows the numbered sequence of actions that occur in the system when an end user submits a simulation for execution on an HPC node.

Icons are © Microsoft Corporation

End user submits the simulation for remote execution from the 3DEXPERIENCE native client app. If 3DOrchestrate run-as security is enabled, the user enters username/password OS credentials for Windows and/or Linux.


Detached Controller Host is a Windows computer with the

Detached Controller Host generates solver input data, updates mesh, and checks out solver license.

Solver inputs are written to the shared folder specified in the


DRM job scheduler inputs received from open DRM custom UI in client app are sent to the open DRM submission script (as command-line arguments). Submission script runs on the 3DOrchestrate server. If run-as security is enabled, the command runs using the end-user-provided OS credentials. On Linux, the command runs via a setuid or sudo SMAExePlaunch binary which validates the credentials using the authentication mechanism configured for the operating system. The command defined by the open DRM server property

DRM job scheduler dispatches the execution job to an HPC (High Performance Computing) node.

When the DRM scheduler dispatches the job to one or more HPC nodes, a 3DOrchestrate transient station starts (only on the primary node). The transient station is passed a workitem ID as part of its command-line arguments. When the transient station starts, it claims that workitem from the 3DOrchestrate server and then uses its adapters to execute the process. TCP socket communication occurs between the transient station machine (HPC node) and the Detached Controller Host station machine. This communication occurs over the ports defined by the station property


(Optional) You can use a custom file transfer script for your open DRM system; see Custom File Transfer Script. In this case, the


The HPC nodes must have the

(Optional) Custom file transfer script pushes results back to the station


Configuring a Detached Controller Host Station

One or more Detached Controller Hosts must be configured to achieve the complete physics execution system described above.

A Detached Controller Host must have the NativeApps_3DEXP media installed. This media includes the native (rich client) simulation apps as well as a public (regular) 3DOrchestrate station. In the station properties file, you must specify the location of the native apps installation in the following property:

fiper.station.sim.clientservices=

You must also set the following station property to open TCP/IP ports for communication between the executables running on different machines.

fiper.station.sim.portrange=

You can specify a port number range such as 56000-57000, a comma-separated list of individual port numbers such as 56010,56012,56050, or a combination of both. If this property is not set, the simulation executables will use any available open ports.
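The accepted formats can be illustrated with a small parser. The following Python sketch is not part of 3DOrchestrate: the function name `parse_port_spec` is hypothetical, and its handling of whitespace, empty tokens, and reversed ranges is an assumption, since the text above only describes ranges, comma-separated lists, and combinations of both. A helper like this may be useful for working out which ports a firewall rule needs to allow.

```python
from __future__ import annotations


def parse_port_spec(spec: str) -> list[int]:
    """Expand a port specification such as "56000-56002,56010" into a sorted list.

    Assumption: tokens are separated by commas and a range is written "low-high".
    """
    ports: set[int] = set()
    for token in spec.split(","):
        token = token.strip()
        if not token:
            continue  # tolerate empty tokens such as a trailing comma
        if "-" in token:
            low_text, high_text = token.split("-", 1)
            low, high = int(low_text), int(high_text)
            if low > high:
                raise ValueError(f"invalid range: {token!r}")
            candidates = range(low, high + 1)
        else:
            candidates = [int(token)]
        for port in candidates:
            if not 1 <= port <= 65535:
                raise ValueError(f"port out of range: {port}")
            ports.add(port)
    return sorted(ports)


if __name__ == "__main__":
    # Prints [56000, 56001, 56002, 56010, 56012]
    print(parse_port_spec("56000-56002,56010,56012"))
```

The result is a flat, de-duplicated list, so overlapping entries such as `56000-56010,56005` do not produce repeated ports.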

In the complete physics execution system, the Detached Controller Host performs the following operations: generate solver input data, update mesh, and check out solver license. The Detached Controller Host does not run the physics simulation solvers—that happens on the DRM-HPC compute nodes.

The Detached Controller Host must make use of a shared disk/file system on your local area network. This folder/directory must be specified in the 3DOrchestrate station property fiper.file.fs.simshared:

fiper.file.fs.simshared=$SHARED_DRIVE

You must either define the environment variable SHARED_DRIVE on the Detached Controller Host or set the property to an absolute path; for example, the Windows UNC path \\server1\sharedsim\.
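Before starting the station, it can help to confirm that the property value actually resolves to a usable directory. The Python sketch below is only an illustration and is not how 3DOrchestrate itself reads the property: the function names are hypothetical, and it assumes that a `$NAME` token in the value refers to an environment variable of the same name.

```python
from __future__ import annotations

import os
import re

# Matches "$NAME" tokens; a trailing "$" (as in some UNC share names) is left alone.
_VARIABLE = re.compile(r"\$(\w+)")


def resolve_simshared(value: str, env: dict[str, str] | None = None) -> str:
    """Replace each $NAME token with its environment value.

    Raises ValueError if a referenced variable is not defined.
    """
    environment = os.environ if env is None else env

    def substitute(match: re.Match) -> str:
        name = match.group(1)
        if name not in environment:
            raise ValueError(f"environment variable {name} is not defined")
        return environment[name]

    return _VARIABLE.sub(substitute, value)


def is_usable_directory(path: str) -> bool:
    """Return True if the path is an existing directory the current user can write to."""
    return os.path.isdir(path) and os.access(path, os.W_OK)


if __name__ == "__main__":
    try:
        shared = resolve_simshared("$SHARED_DRIVE")
    except ValueError as error:
        print(error)
    else:
        state = "usable" if is_usable_directory(shared) else "missing or not writable"
        print(f"{shared}: {state}")
```

Running the check under the same OS account that runs the station gives the most meaningful result, because write access to the share depends on that account.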

The simshared directory exists as a waypoint between the Windows Detached Controller Host and the remote HPC compute node (either Linux or Windows). When executing a physics simulation or topology (shape) optimization (from the Functional Generative Design app), the Detached Controller Host puts the solver input files in the simshared directory where they are visible to the HPC compute node.

Sizing Considerations for a Detached Controller Host

The memory requirements for your Detached Controller Host station(s) depend on the number of concurrent jobs that will be executing. Consider the following issues when planning the computer that will be a Detached Controller Host station for 3DOrchestrate:

- One station and substation per user—each station can consume up to 2 GB RAM by default.

- The station continues running for the duration of the simulation—it handles mesh update, export of model to disk, license checkout, and import of model to 3DSpace.

- Sample memory requirements for mesh generation:

  | Peak Memory (KB) | # Elements | # Nodes |
  | --- | --- | --- |
  | 2,387,096 | 86,433 | 382,597 |
  | 3,764,216 | 376,044 | 1,767,483 |
  | 4,110,944 | 470,283 | 2,221,154 |

- Meshing is single-threaded per work item (one core is used).

- The station property fiper.station.concurrency controls the number of concurrent work items. See Concurrency Limit.
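The considerations above can be turned into a rough worst-case estimate. The Python sketch below is an illustration only, not an official sizing formula. It assumes that each concurrent user needs one station allocation of up to 2 GB, that mesh generation memory comes on top of that allocation, that all jobs reach their peak at the same time, and that 1 GB equals 1024 × 1024 KB. The function name and constants are hypothetical; the peak values are the sample mesh generation figures listed above.

```python
KB_PER_GB = 1024 * 1024

# Sample peak memory values (KB) for mesh generation, taken from the list above.
SAMPLE_MESH_PEAK_KB = [2_387_096, 3_764_216, 4_110_944]

# "Up to 2 GB RAM by default" per station.
STATION_GB_DEFAULT = 2.0


def estimate_ram_gb(
    concurrent_users: int,
    mesh_peak_kb: int,
    station_gb: float = STATION_GB_DEFAULT,
) -> float:
    """Worst-case RAM estimate: every user holds a station and a peaking mesh job.

    Assumption: station memory and mesh peak memory are additive.
    """
    if concurrent_users < 0 or mesh_peak_kb < 0:
        raise ValueError("inputs must be non-negative")
    per_user_gb = station_gb + mesh_peak_kb / KB_PER_GB
    return concurrent_users * per_user_gb


if __name__ == "__main__":
    for peak_kb in SAMPLE_MESH_PEAK_KB:
        total = estimate_ram_gb(concurrent_users=4, mesh_peak_kb=peak_kb)
        print(f"peak {peak_kb:,} KB, 4 users: about {total:.1f} GB")
```

Because meshing uses one core per work item, the same user count also gives a first guess at the number of cores that can be busy at once.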